The secret chickens that run LLMs

Humans often organize large, skilled groups to undertake complex projects and then bizarrely place incompetent people in charge. Large language models (LLMs) such as OpenAI GPT-4, Anthropic Claude, and Google Gemini carry on this proud tradition with my new favorite metaphor of who has the final say in writing the text they generate—a chicken.

There is now a sequel to this article, Secret LLM chickens II: Tuning the chicken, if you'd like to learn how and why the "chicken" can be customized.

- Modern LLMs are huge and incredibly sophisticated. However, for every word they generate, they have to hand their predictions over to a simple, random function to pick the actual word.

- This is because neural networks are deterministic, and without the inclusion of randomness, they would always produce the same output for any given prompt.

- These random functions that choose the word are no smarter than a chicken pecking at differently-sized piles of feed to choose the word.

- Without these "stochastic chickens," large language models wouldn't work due to problems with repetitiveness, lack of creativity, and contextual inappropriateness.

- It's nearly impossible to prove the originality or source of any specific piece of text generated by these models.

- The reliance on these "chickens" for text generation illustrates a fundamental difference between artificial intelligence and human cognition.

- LLMs can be viewed as either deterministic or stochastic depending on your point of view.

- The "stochastic chicken" isn't the same as the paradigm of the "stochastic parrot."

For much of this post, I use the term word instead of token to describe what an LLM predicts. Tokens can be punctuation marks, or parts of words—even capitalization can split words into multiple tokens (for example, "hello" is one token, but "Hello" might be split into two tokens of "H" and "ello". This is a friendly simplification to avoid having to address why the model might predict half a word or a semicolon, since the underlying principles of the "stochastic chicken" are the same.

LLMs aren't messing around when they say they're "large."

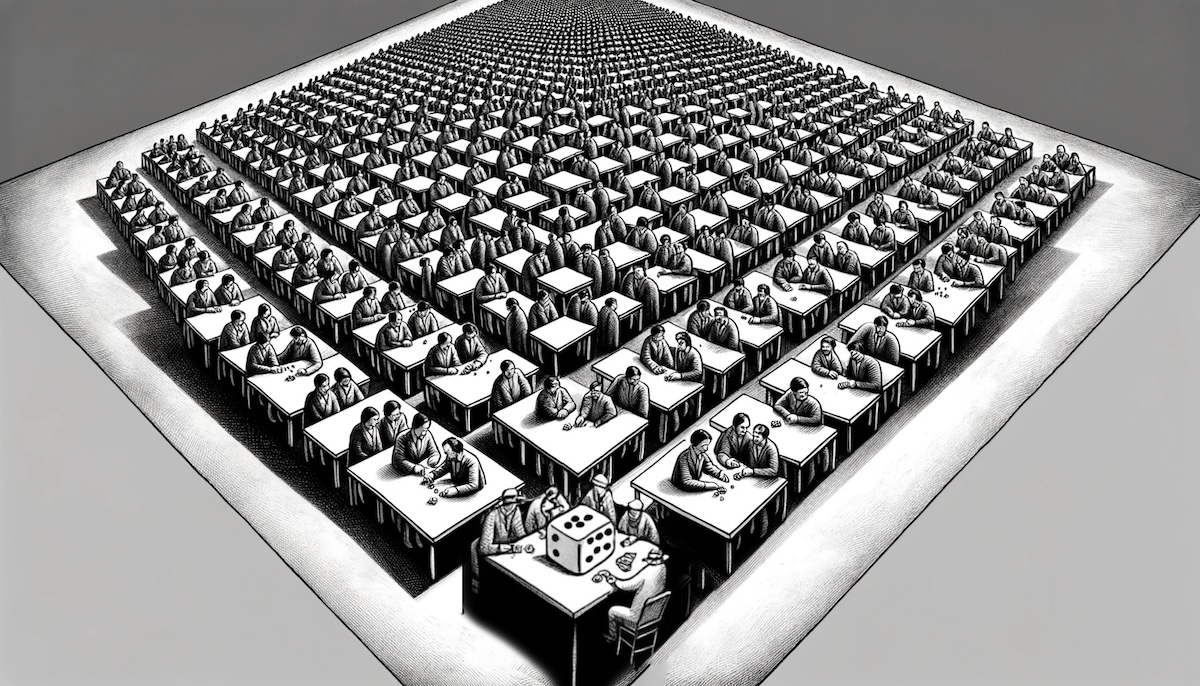

Picture a stadium full of people. Here's Kyle Field in College Station, Texas, with a seating capacity of 102,733. In this photo from 2015, it looks pretty full, so let's assume there are 100,000 people there.

We've given each one of these very patient people a calculator along with the instruction that the person in the seat in front of them will give them some number, at which point they need to do a little work on their calculator and pass their new number to the person behind them. For the sake of this analogy, let's assume that despite a considerable number of them being distracted, drunk, or children, they are all able to complete the task.

As the numbers travel from the front of the stadium to the back, they undergo a series of transformations. Each person's "little work" on their calculator is akin to the operations performed by neurons in a layer of the neural network—applying weights (learned parameters), adding biases, and passing through activation functions. These transformations are based on the knowledge embedded in the model's parameters, trained to recognize patterns, relationships, and the structure of language.

By the time the numbers reach the last person in the stadium, they have been transformed multiple times, with each step incorporating more context and adjusting the calculation based on the model's architecture and trained parameters. This final result can be seen as the model's output—a complex representation of the input data that encodes the probabilities of the next possible word.

The model's final output isn’t a single number or word, though; it’s a list of words and probabilities, where each probability is the likelihood that that word will be the next word in a sentence.

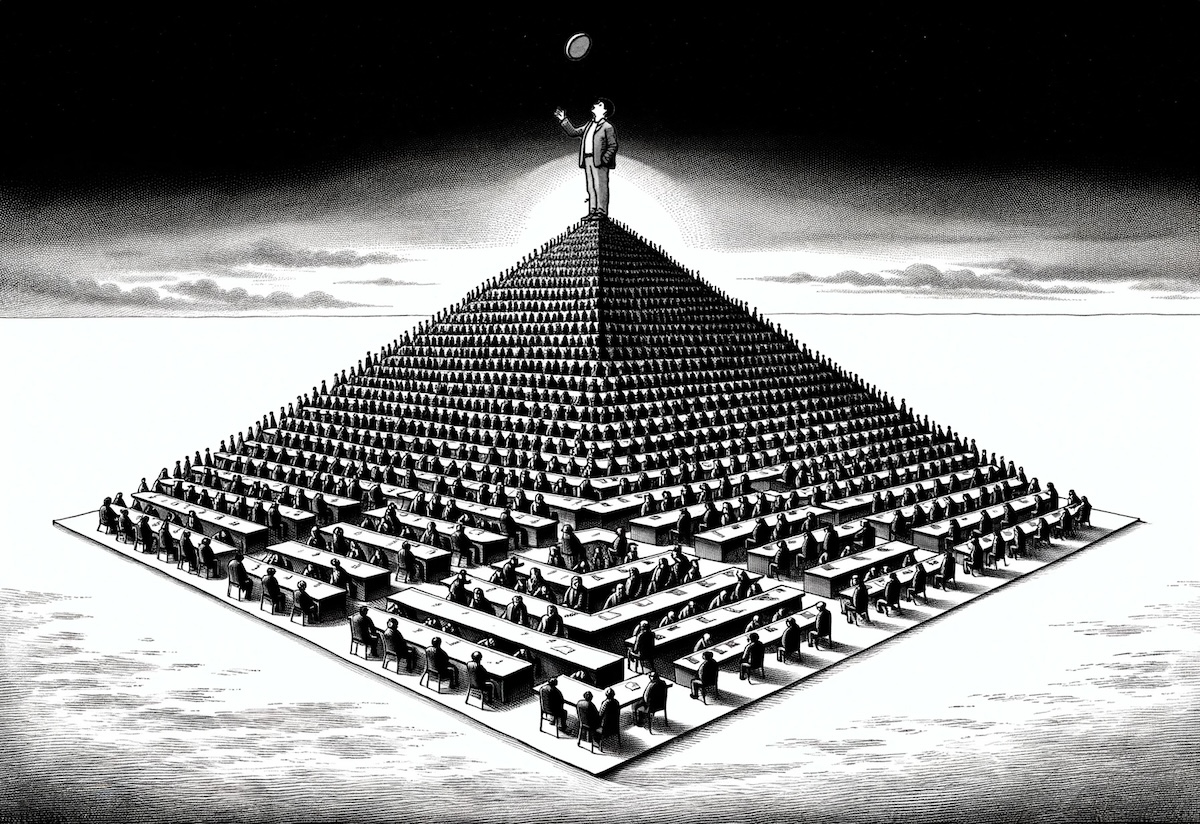

But here's the strange part: despite all this incredible depth of stored knowledge, we're going to take these recommended answers provided by these thousands of people, and select the next word using a completely random process. It's kind of like a huge pyramid where people work together to assemble a set of possible answers to a problem, then hand it at the top to a person flipping a coin.

Generated with OpenAI DALL-E 3 and edited by the author.

Actually, we can do better than this. To really illustrate the contrast between the complexity of a model with billions of parameters getting its final answer from a dumb-as-a-rock random number generator, let's use something truly silly.

Let's use a chicken.

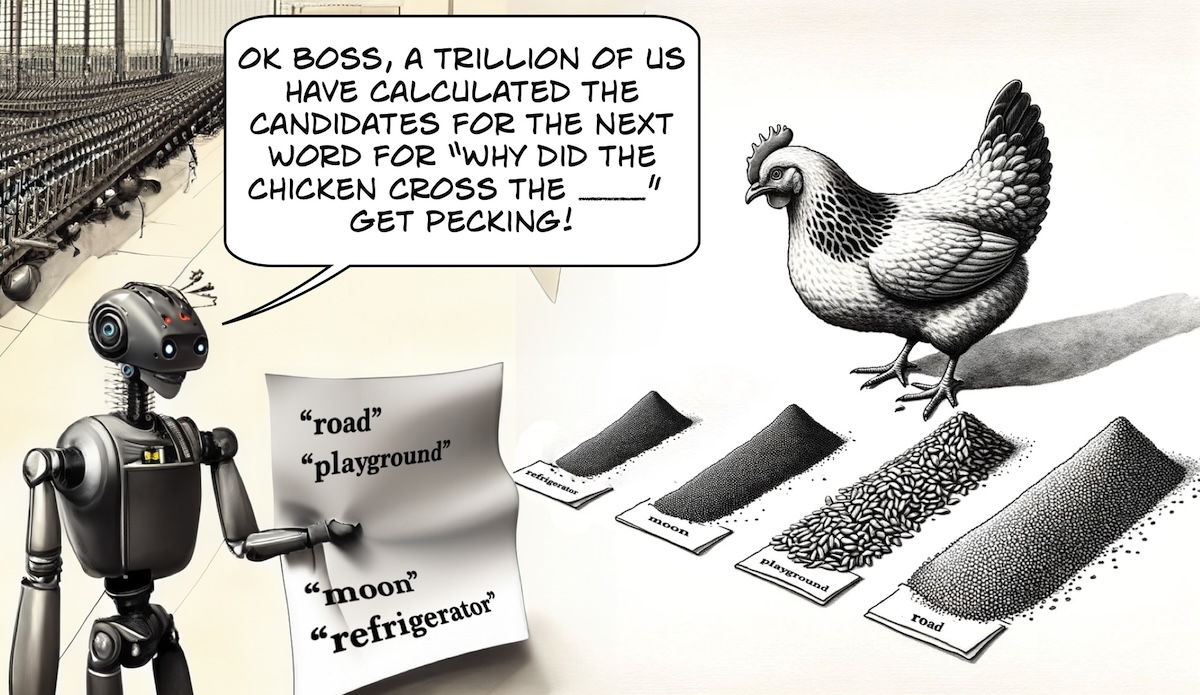

To get this chicken involved, we're going to use words and probabilities to create piles of chicken feed, each one representing a potential next word the model might generate. The bigger the pile, the higher the probability that the associated word should be selected.

Generated with OpenAI DALL-E 3 and edited by the author.

I am not a licensed farmer, and this is just how I assume chickens work.

The chicken, oblivious to the tireless efforts of the stadium's occupants, simply wanders among the piles of chicken feed. The sizes of these piles influence its decision; larger piles are more alluring because they are simpler to spot and peck at, but the chicken also has ADHD. It's whims or a sudden distraction might send it running to a smaller pile instead.

Why on earth would we do something like this? Why would we create massive, intelligent machines that ultimately rely on the random judgments of a gambling idiot?

The answer is that deep language models such as LLMs are built with neural networks, and neural networks are deterministic.

What does deterministic mean?

Being deterministic means that if you do something exactly the same way every time, you'll always get the same result. There's no randomness or chance involved. Think of it like using a simple calculator; on any basic calculator will always show "4". It won't suddenly decide to show "5" one day.

Deterministic means predictable and consistent, with no surprises based on how you started.

In contrast, something that's not deterministic (we call this stochastic) is like rolling a die; even if you try to do it the same way each time, you can get different outcomes because chance is involved.

Why are neural networks deterministic?

A neural network is a complex system inspired by the human brain, designed to recognize patterns and solve problems. It's made up of layers of artificial neurons, which are small, simple units that perform mathematical operations. Each neuron takes in some input, applies some mathematical function to it, and then passes the result on to the next neuron in line.

As a huge simplification of how these models work, these neurons are organized into layers: there's an input layer that receives the initial data (like representations of words in a sentence), one or more hidden layers that process the data further, and an output layer that provides the final decision (the probability distribution for the predicted next word in the text).

The diagram below might look crazy complicated, but the only thing you need to understand is that each line represents some math function performed from one circle to the next. Some numbers go in one side, and some numbers go out the other side. And the stuff that comes out on the right side will always be the same for identical stuff you put into the left side.

Generated with NN-SVG and DALL-E 3

Most LLMs use a more complex neural architecture called a transformer, but again, we'll just simplify the idea for convenience. It makes no difference for this discussion, since transformers are also deterministic and require a chicken for generative tasks.

If you give the network the same input and the network has not been changed (ie., its weights, or how much it values certain pieces of input, remain the same), it will always perform the same calculations in the same order, and thus return the same output. While LLMs will have billions of these neurons, the basic idea is the same: for a given input, you will always get the same output. Typing into a calculator will always give you , no matter how many times you do it.

"The model's final output isn’t a single number or word, though; it’s a list of words and probabilities, where each probability is the likelihood that that word will be the next word in a sentence."

So why do LLMs give different responses each time to the same prompt?

This seems suspicious. If you ask an LLM the same thing multiple times, it will give you different answers each time. This contradicts the claim that neural networks are deterministic, right?

ENTER THE CHICKEN

This is why we need the chicken. The chicken is stochastic, which adds randomness and unpredictability to the whole system.

The previously mentioned humans in the stadium, who for some reason have deified a chicken, will present the chicken with a series of words and probabilities. Technically, these are not words but tokens; however, for the sake of simplifying this analogy, we'll refer to them as words. These words and probabilities can be visualized as a series of piles of chicken feed, where each word pile's size corresponds to its probability.

As an example, here's a prompt that we can give to our language model to see what next word it's going to predict: "After midnight, the cat decided to..."

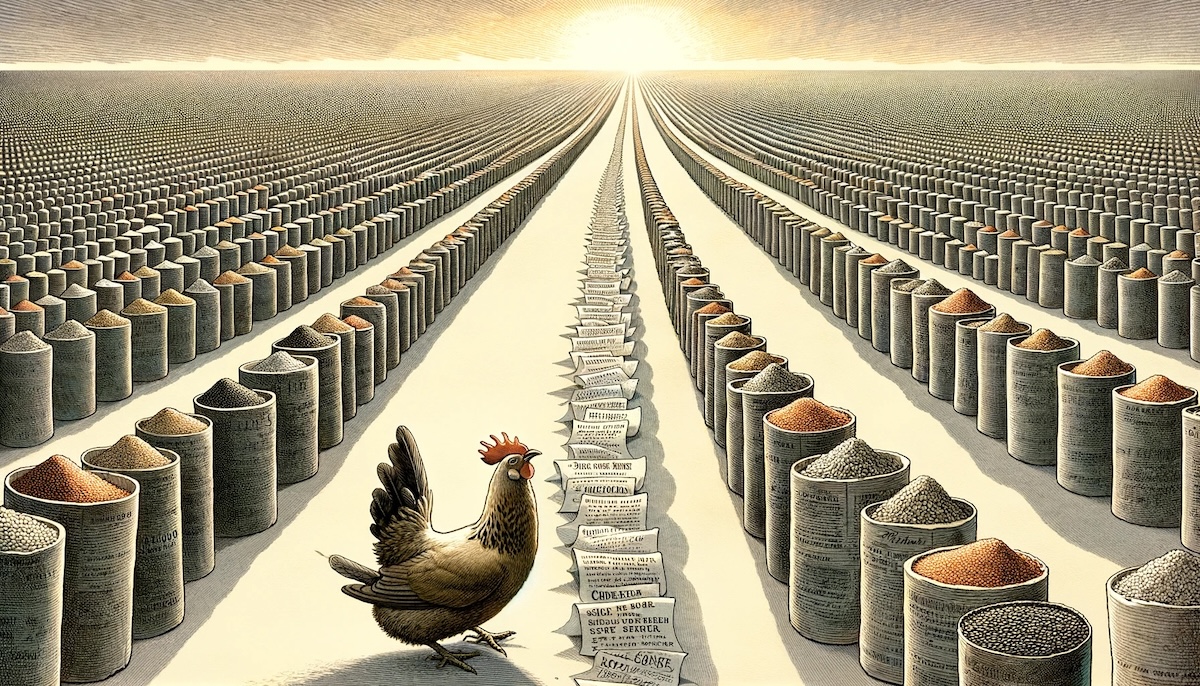

If we show all the possible words that could finish this sentence, the number of food piles would match the size of the model's vocabulary. For a model like GPT-3, this would be 50,257 piles of food!

The way that GPT-3 can encapsulate all the words in 50+ languages into just 50,257 tokens is its own special magic that I'll cover in another post. Here's a Wikipedia link in the meantime.

Generated with OpenAI DALL-E 3

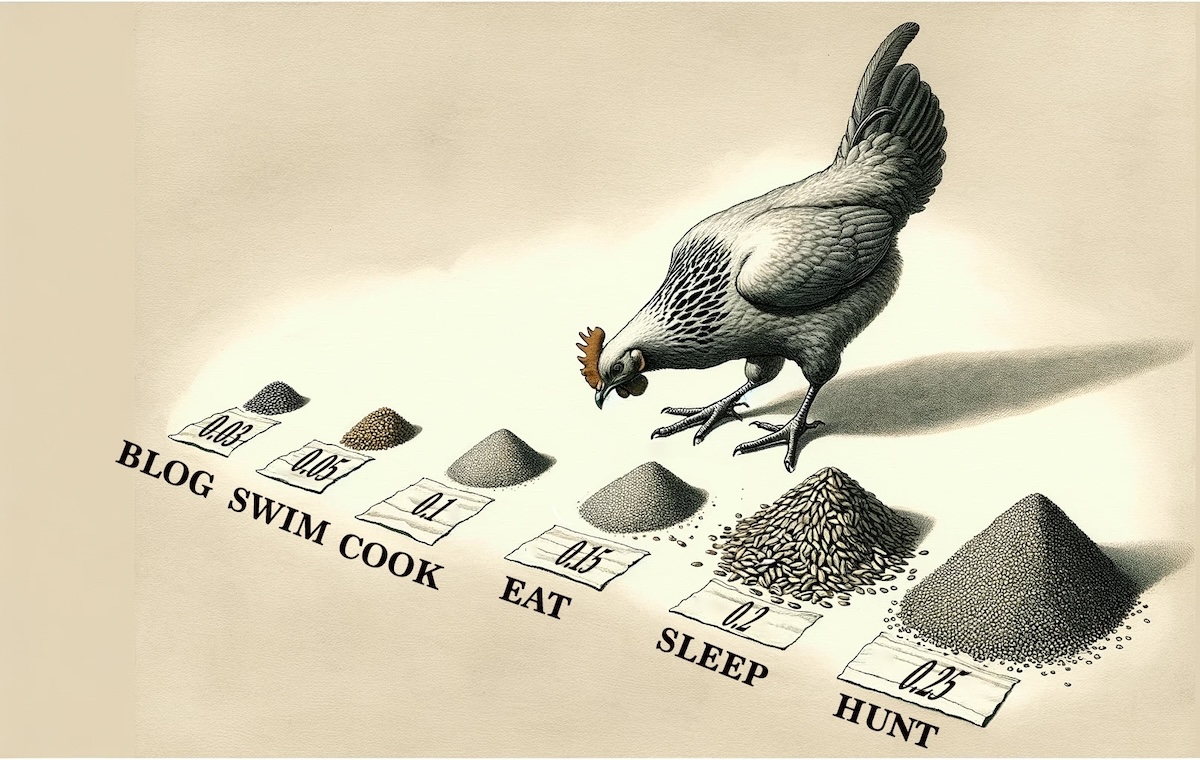

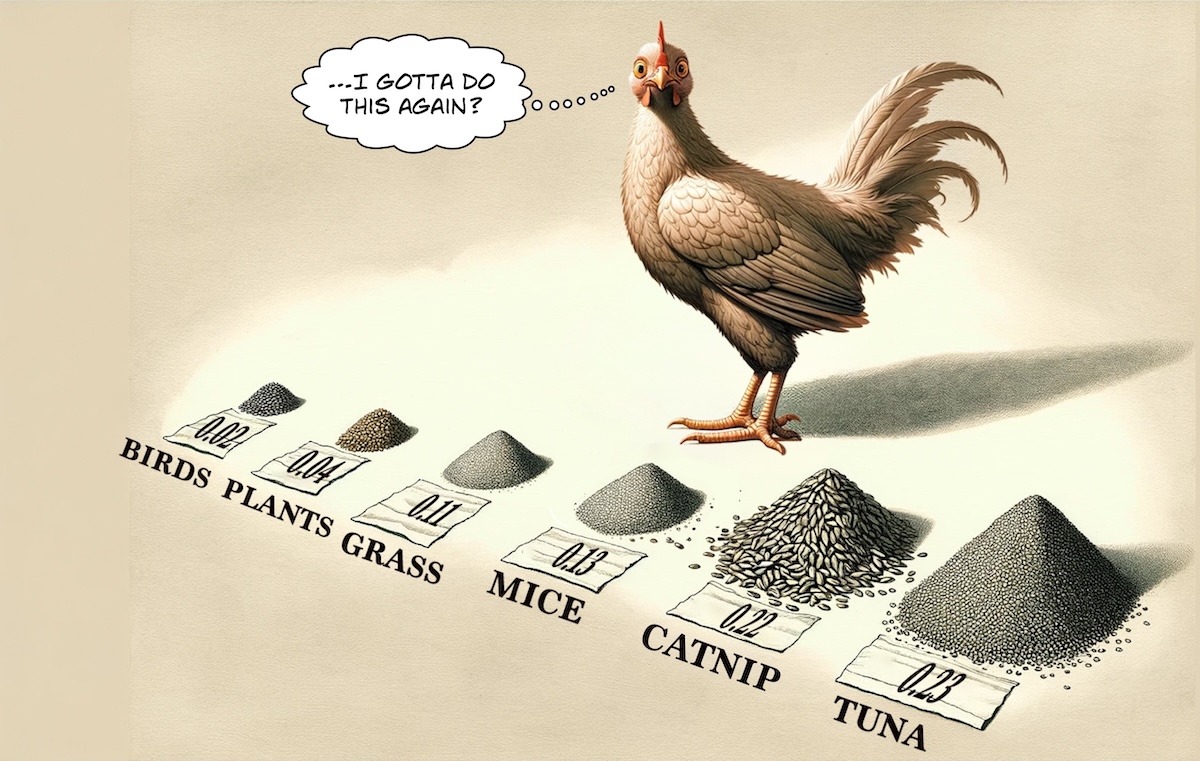

Clearly, trying to visualize a chicken choosing from 50,257 options isn't very useful. Let's instead limit our chicken's choices to only the top 6 words that the language model chose. Here are the options for the chicken and how it might look when the chicken is presented with them:

| Activity | blog | meditate | cook | eat | sleep | hunt |

|---|---|---|---|---|---|---|

| Probability | 0.03 | 0.05 | 0.1 | 0.15 | 0.2 | 0.25 |

"After midnight, the cat decided to..."

Chicken and feed generated with OpenAI DALL-E 3 and edited by the author.

Let's suppose the chicken decides to choose the word "eat," despite it only being the third most probable word since it has the third largest feed pile.

Unfortunately, our chicken's work is just beginning, because it will now need to choose every subsequent word in the following way. Once again, it will be presented with a list of probable words from thousands of hardworking humans in the stadium, and once again, it will have to use its tiny chicken brain to select a word. Now our prompt to complete is "After midnight, the cat decided to eat..." with the following top 6 possible words and their probability distribution:

| Food | birds | plants | grass | mice | catnip | tuna |

|---|---|---|---|---|---|---|

| Probability | 0.02 | 0.04 | 0.11 | 0.13 | 0.22 | 0.23 |

"After midnight, the cat decided to eat..."

Chicken and feed generated with OpenAI DALL-E 3 and edited by the author.

The incredible irony of all this is that some of these language models are trained using trillions of words—comprising the collective works of humanity—and costing millions of dollars in the process. We then hand these predictions over to an unintelligent gambling chicken to choose what to actually say. With each choice from the chicken, the model's output increasingly diverges from the sequence of the most probable words, and yet somehow, this produces more natural-sounding language.

It's kind of bonkers.

In fact, not using the chicken—and just taking the most probable words from the LLM—generates poor language. This is called greedy decoding, and it has a whole host of problems.

Why we need the chicken

Repetitiveness

Not using the chicken, and just taking the most probable word (greedy decoding) tends to produce repetitive or looped text. Since it always chooses the most probable next word, it can get stuck in a pattern where the same sequence of words keeps being selected. This can happen in situations where the model finds a certain pattern of text to be highly probable and, without the chicken to encourage diversity, falls into a cycle of selecting the same sequence over and over again.

Here's a short example with some creative writing:

Prompt: "Write a short story taking place in a park in the morning"

The model begins the sentence without issue, and begins by writing "The park was serene in the early morning, with", but then it runs into problems.

Greedy Decoding Sequence:

- The model predicts "the" as the most probable next word.

- Following "the", it predicts "birds" as the next word.

- After "birds", it predicts "were" as the next word.

- Then, "singing" is predicted.

- However, after "singing", the model might predict "in" as the next most probable word, leading back to "the" again, and then "park", forming a loop.

- We get this conversation:

Write a short story taking place in a park in the morning

The park was serene in the early morning, with the birds were singing in the park with the birds were singing in the park with the birds were singing in the park with the birds were singing in...

Well, that didn't work.

Generated with OpenAI DALL-E 3 and edited by the author.

Lack of Creativity and Diversity

Real human language is rich and varied, often taking unexpected turns, like a beautiful jellyfish riding a purple unicorn. By always choosing the most probable word, the generated text misses out on these creative and less predictable aspects of language, resulting in outputs that feel dull or formulaic.

Generated with OpenAI DALL-E 3 and edited by the author.

Contextual Inappropriateness

As weird as this sounds, the most probable next word is not always appropriate. Language is highly contextual, and the best choice in one situation might not be the most statistically common one. Greedy decoding can lead to nonsensical or awkward phrases that, while grammatically correct, do not fit well with the preceding text. Here's another example using a job application:

Prompt: "Write me a cover letter applying for a job in marketing"

Again, the model begins the sentence without issue, and begins by writing, "Dear Ms. Smith, I am writing to express my interest ..." but then again we hit a pothole.

Since this is a cover letter for a job application, the language model should discuss the sender's relevant skills, experiences, or why they are a good fit for the job. However, a contextually inappropriate continuation might look like this:

Write me a cover letter applying for a job in marketing

Dear Ms. Smith, I am writing to express my interest in the marketing position advertised on your website. With my extensive experience in the field, I believe I am well-qualified for the role.

In addition to my professional qualifications, I love to party on weekends and often go skydiving for fun. My favorite TV show is 'The Office,' and I'm a great cook. I'm looking for an adventurous partner in crime who's down for an impromptu trip to Vegas or a quiet evening at home watching "Real Housewives".

The model, perhaps trained on more dating profiles than job applications, has continued the cover letter using the most probable words it predicted. While language models can recognize patterns and generate text based on statistical probabilities, they don't understand context in the same way humans do. They might link professional qualifications to personal hobbies due to statistical correlations in the training data and not recognize a job application, which sounds a tad ridiculous.

Generated with OpenAI DALL-E 3 and edited by the author.

Inability to Explore Multiple Paths

Greedy decoding means that an LLM will always produce the same output for any given input. Asking it to "tell a story," for example, would always result in the same story, assuming it isn't plagued with the previously mentioned problems. Language generation, especially in creative or complex tasks, often benefits from considering multiple possible continuations at each step. Greedy decoding's linear path through the probability distribution means fewer interesting outputs that an exploratory approach could uncover.

What does the necessary existence of the chicken imply?

There's probably no way to definitively prove that a given text was generated.

You may have heard this before since it's been floating around the internet for a few years, but every time you shuffle a deck of playing cards, it's almost certain that the specific order of cards has never existed before and will never exist again. In a standard deck of 52 cards, the number of possible ways to arrange the cards is 52 factorial . This number is approximately , an extraordinarily large figure.

Because we're now talking about the size of a model's vocabulary, I'll switch to using the accurate term "token".

In the case of a language model generating a sequence of, say, 2,000 tokens, the number of possible combinations is also staggeringly high. It actually blasts right past the number of configurations of a deck of 52 playing cards!

Generated with OpenAI DALL-E 3.

The logarithm (base 10) of the number of possible combinations for a sequence of 2,000 tokens, with GPT-3's vocabulary size of 50,257 unique tokens, is approximately . This means the total number of combinations is .

To put this into perspective, is an astronomically large number. It's far beyond the total number of atoms in the observable universe (estimated to be around ). Even considering reasonable sampling mechanisms that might drastically reduce this number, the space of possible combinations is so large that for practical purposes, it is infinite.

Therefore, the likelihood of generating the exact same sequence of 2,000 tokens twice is so incredibly small that it's effectively zero in any practical sense. The ridiculous size of this combinatorial space basically guarantees that generated text of any length is unique.

How is the size of the combinatorial space calculated?

Math & Python code (optional technical content)

Given a vocabulary size and a sequence length , the total number of possible combinations can be calculated as . For a vocabulary size of 50,257 and a sequence length of 2,000, the calculation is as follows:

However, this number is astronomically large and beyond direct calculation. Instead, we use logarithms to estimate this number. The logarithm (base 10) of the total number of combinations is calculated as:

Here's how we can calculate this with Python:

import math

# Assuming a reasonable vocabulary size for a language model

# For simplicity, let's take GPT-3's vocabulary size of 50,257 unique tokens

vocabulary_size = 50257

# Number of tokens in the sequence

sequence_length = 2000

# Calculating the number of possible combinations

# Since each token can be any one of the 50,257, for a sequence of 2,000 tokens,

# the total number of combinations would be vocabulary_size ** sequence_length

# However, this number is astronomically large and beyond what can be reasonably calculated directly.

# Instead, we will use logarithms to estimate this.

# Calculate the logarithm (base 10) of the number of combinations

log_combinations = sequence_length * math.log10(vocabulary_size)

log_combinations

Output

9402.393121225747

Despite LLMs appearing to think like people, the need for a stochastic chicken is an argument for why they don't.

The use of the chicken in LLMs indeed highlights a fundamental difference between how these models generate text, and how humans think and produce language.

LLMs generate text based on statistical patterns learned from vast amounts of data, and the chicken introduces randomness as a mechanism to produce diverse and contextually appropriate responses. In contrast, cognitive processes like memory, reasoning, and emotional context, which do not rely on statistical sampling in the same way, are what drive human thought and language production.

Generated with OpenAI DALL-E 3 and edited by the author.

In my opinion, the chicken is a stopgap, a temporary bandaid to solve a problem, and it does not imbue the model with understanding or cognition. This distinction is central to ongoing discussions in AI about the nature of intelligence, consciousness, and the difference between simulating aspects of human thought and actually replicating the underlying cognitive processes.

Whether LLMs are deterministic or stochastic depends on your point of view.

Your perspective on this will change depending on whether you focus on the neural network architecture itself or instead consider the complete text generation process, which includes our beloved chicken.

LLMs as deterministic systems

The transformer architecture and the learned parameters of the model are fixed once the model is trained. Given the same input sequence and model parameters, the network will always produce the same output, which, in the case of LLMs, is a distribution over the next possible tokens. The computation through the network's layers and the resulting probabilities for the next token are entirely predictable and repeatable. In this view, the distribution of tokens produced by the model represents the entire system, and the chicken is a part of some other system.

LLMs as stochastic systems

When considering the model as a complete system for generating text, the inclusion of the chicken as an integral part of its operation means that the overall system behaves in a stochastic manner. The stochastic chicken introduces randomness, which results in different text sequences in different runs with the same input prompt. With this view, since text cannot be generated without the chicken, the chicken is an integral part of the system.

Combined perspective: deterministic core of a stochastic system

It's probably easiest to view LLMs as deterministic systems with respect to their neural network computation, producing a predictable set of output probabilities for the next token when given an input. However, when considering the complete text generation process, which includes the decision-making by a silly bird of some kind, the system behaves stochastically.

It's still mind-bending that these models that have fundamentally changed the world rely on pure chance for their final answers, and so far, this is the best method we have.

Generated with OpenAI DALL-E 3 and edited by the author.

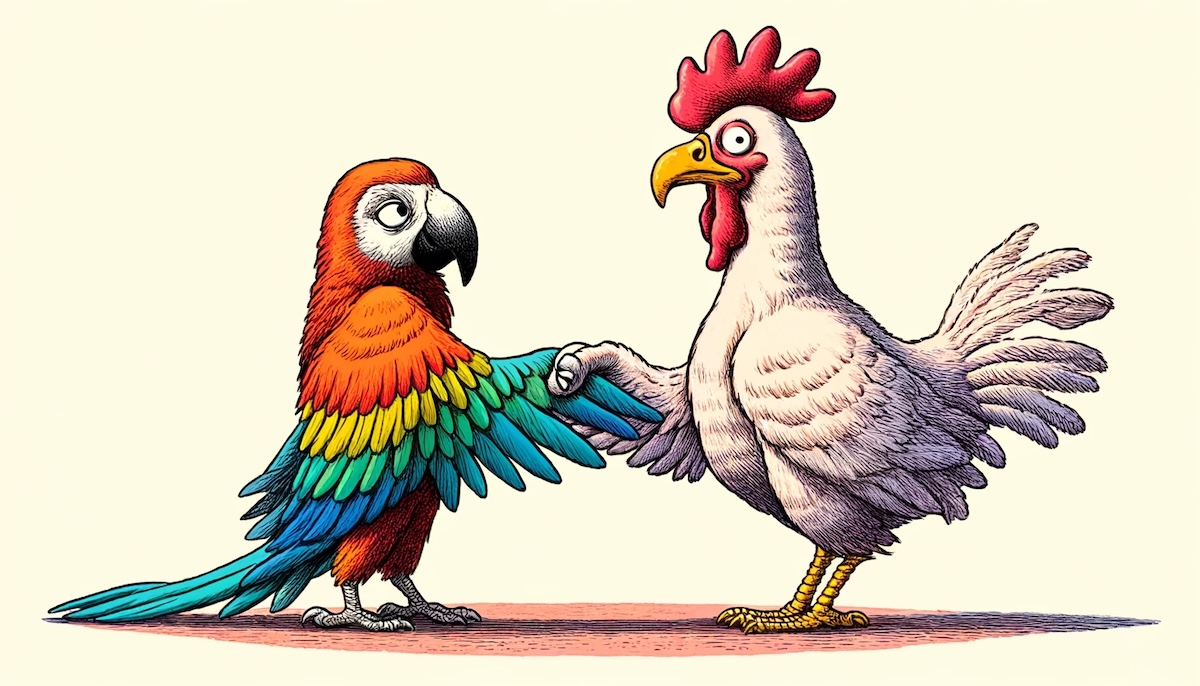

Is a "stochastic chicken" the same as a "stochastic parrot"?

You may have heard the term "stochastic parrot" as a way of saying that LLMs don't understand what they're actually saying.

The term was coined in the paper On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜 by Bender et al, in which they argue that LLMs probabilistically link words and sentences together without considering meaning.

However, despite the fact that both chickens and parrots are birds, they don't refer to the same thing.

Generated with OpenAI DALL-E 3 and edited by the author.

The "stochastic parrot" refers to the neural network part of the LLM rather than the sampling process. That is, it refers to the stadium full of people working together, rather than the chicken. The idea of the stochastic parrot critiques a language model's operation in terms of how it processes and generates language, based purely on the statistical patterns observed in the training data.

It claims that:

-

LLMs, like parrots, mimic words and phrases without understanding their meanings. It posits that neural networks regurgitate large chunks of learned text based on probabilities derived from their training data rather than on semantic understanding or reasoning.

-

Biases found in the training data are perpetuated on an enormous scale, and the cost of training such large models damages the environment.

A lot of this debate hinges on philosophical ideas of what "understanding" and "reasoning" even mean, and there's a comprehensive Wikipedia article on it if you're interested in reading more. It is indeed a bit ironic that the authors of the "stochastic parrot" paper named it after a "random" parrot, when their primary criticism of the models deals with the deterministic neural network component.

The parrot-chicken partnership

I've used LLMs daily now for a year and a half, and in my opinion, the idea that they're merely mimicking answers is overly simplistic. While the concerns of bias and environmental damage are valid, I've seen GPT-4, Claude 3 Opus, and Google Gemini perform sophisticated forms of reasoning, and it's dismissive and naive to call these models mere parrots. That's just my opinion, but the whole "stochastic parrot" thing is also a matter of opinion and a topic of ongoing research.

In contrast, the sampling strategies of the stochastic chicken very much exist and are a known part of how LLMs generate text, independent of how the neural network model provides the distribution of words to choose from.

So LLMs might be stochastic parrots—or they might not—but either way, a chicken is ultimately in charge.

Generated with OpenAI DALL-E 3 and edited by the author.

This topic is continued in my next article, Secret chickens II: Tuning the chicken, which discusses techniques that affect the behaviour of the chicken.

Key Takeaways

-

The Stochastic Chicken: The "stochastic chicken" metaphor effectively illustrates how randomness is essential in text generation by LLMs, contrasting the sophistication of neural computations with the simplicity of random choice mechanisms.

-

Neural Determinism and Stochastic Outputs: The neural network parts of LLMs are deterministic, meaning they always produce the same outputs for given inputs. However, the chicken adds randomness to the process of choosing the final output, which makes the whole system stochastic.

-

Purpose of Randomness: The randomness introduced by the stochastic chicken is needed to prevent issues like repetitiveness and lack of creativity in generated text. It ensures that LLM outputs are diverse and not just the most statistically likely continuations.

-

Human vs. AI Cognition: The reliance on stochastic processes (like the chicken) to finalize outputs highlights fundamental differences between artificial intelligence and human cognitive processes, emphasizing that AI may not "think" or "understand" in human-like ways despite producing human-like text.

-

Deterministic vs. Stochastic Views: Depending on the focus—on the neural network alone or on the complete text generation process, including randomness—LLMs can be viewed as either deterministic or stochastic systems.

-

Uniqueness of Generated Text: Given the vast combinatorial possibilities of token sequences in LLMs, any substantial text generated is virtually guaranteed to be unique, underscoring the impact of the chicken and making it nearly impossible to prove plagiarism.

-

Stochastic Chicken vs. Stochastic Parrot: The "stochastic chicken" (the randomness in selecting outputs) and the "stochastic parrot" (the critique of LLMs as merely mimicking patterns from data without understanding) are not the same thing. Whether LLMs are parrots is somewhat of a conjecture, but LLMs absolutely do contain a metaphoric chicken pecking away.